As a researcher and an HCI enthusiast, I have always wanted to attend a major HCI conference. This year, I was given the opportunity to visit one. As CHI 2013 was already over by then, I searched for other similar conferences and found UIST (User Interface Software and Technology) 2013, which turned out to be probably an even better choice, given my technical background. Even better, the conference was organized together with one of its offspring, ITS (Interactive Tabletops and Surfaces) 2013. So I immediately registered, and a month later I visited St. Andrews in Scotland for both of them. The first conference to take place was ITS 2013, so this blog post is dedicated to it. I will write a separate post for UIST 2013.

As the name makes clear, ITS is all about interactive (more or less touch) surfaces. Mostly big surfaces, although there were also some discussions about mobile devices. The invited speaker was Jeff Han of Perceptive Pixel (since acquired by Microsoft), which was all the more appropriate, as his viral TED talk was more or less the thing that got me into the entire multi-touch table business. He talked about his story, the rise of multi-touch displays, and how to start a hardware start-up.

The conference was quite diverse, and I was amazed by the kind of crazy ideas people come up with. This also got me thinking that the ITS community is quite open-minded (something that I cannot say for most of the computer vision community, which I observe more frequently). I have yet to verify this observation empirically by submitting a paper, as the acceptance rate is quite low. I will only mention some ideas that really got me interested:

- Using a knife and fork analogy for two finger gestures

- An HTML5 development framework for multi-touch surfaces

- Perceptual grouping and selection of elements

- Combining surfaces with NFC communication

- Investigating piano chords to design multi-touch menus

- Textile displays

- Latency estimation for interactive displays

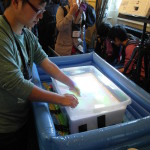

The demo session had more than a dozen different technologies, from prototypes to devices that you can (in theory at least) actually buy. The second generation of Microsoft PixelSense was cool, as was a three-display MultiTaction setup by MultiTouch Ltd. from Finland. There were also some wackier ideas, like a water-based display that I do not see getting beyond a very wet prototype. However, they show (in my opinion at least) the openness of the community. Anyway, here are some photos.