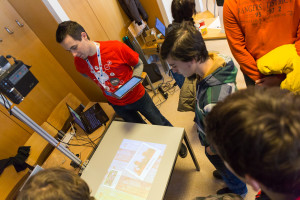

On 14 and 15 February, the Faculty of Computer and Information Science held its open days. ViCoS Lab presented an alternative multi-touch table that uses a Kinect sensor to detect fingers and a projector to display the interface on a regular table.

Category Archives: multi-touch

HDCMD: a Clustering Algorithm to Support Hand Detection on Multitouch Displays

Bojan Blažica, Daniel Vladušič & Dunja Mladenić

Abstract

This paper describes our approach to hand detection on a multitouch surface, i.e. detecting how many hands are currently on the surface and associating each touch point with its corresponding hand. Our goal was to find a general software-based solution to this problem applicable to all multitouch surfaces regardless of their construction. We therefore approached hand detection with a limited amount of information: the position of each touch point. We propose HDCMD (Hand Detection with Clustering on Multitouch Displays), a simple clustering algorithm based on heuristics that exploit the knowledge of the anatomy of the human hand. The proposed hand detection algorithm’s accuracy evaluated on synthetic data (97%) significantly outperformed XMeans (21%) and DBScan (67%).
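To give a feel for what position-only hand detection can look like, here is a minimal Python sketch. It is not the HDCMD algorithm from the paper; it is a simple greedy grouping that uses two assumed anatomical constraints: a hand has at most five fingers, and two touches of the same hand are never farther apart than a maximum hand span. The function name, the threshold value and its unit are hypothetical.

```python
import math

# Assumed values, not taken from the paper.
MAX_HAND_SPAN = 200.0  # largest distance between two touches of one hand (e.g. in mm)
MAX_FINGERS = 5        # a hand cannot contribute more than five touches


def group_touches_into_hands(points, max_span=MAX_HAND_SPAN, max_fingers=MAX_FINGERS):
    """Greedily assign each (x, y) touch point to a hand.

    A point joins the hand whose farthest member is closest to it, provided
    that the hand is not full and that farthest member is within max_span.
    Otherwise the point starts a new hand.
    """
    hands = []
    for p in points:
        best_hand, best_dist = None, None
        for hand in hands:
            if len(hand) >= max_fingers:
                continue  # hand already has five touches
            farthest = max(math.dist(p, q) for q in hand)
            if farthest <= max_span and (best_dist is None or farthest < best_dist):
                best_hand, best_dist = hand, farthest
        if best_hand is None:
            hands.append([p])  # no compatible hand: open a new one
        else:
            best_hand.append(p)
    return hands
```

Because the grouping is greedy, the result can depend on the order in which touches arrive; a real system would need to handle that, as well as hands that are close together.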

[Link – Springer]

Multitouch – not only gestures

Multitouch interaction is usually associated with gestures, but the richness of multitouch data can also be exploited in other ways. This post provides a few examples taken from recent research literature.

In “MTi: A method for user identification for multitouch displays”, we provide an overview of the literature on user identification and user distinction on multitouch multi-user displays. State-of-the-art methods are presented by considering three key aspects: user identification, user distinction and user tracking. Next, the paper proposes a method for user identification called MTi, which identifies users based solely on the coordinates of touch points (and is thus applicable to all multitouch displays). The coordinates of the five touch points are first transformed into 29 features (distances, angles and areas), which an SVM model then uses to perform identification. The method achieved 94 % accuracy on a database of 100 users. Additionally, a usability study was performed to see how users react to MTi and to frame its scope.
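The kind of feature extraction described here can be sketched in a few lines of Python. This is only an illustration of the three feature families (distances, angles and areas); the particular selection below is an assumption and yields 24 features, not the 29 used by MTi. All function names are hypothetical.

```python
import math
from itertools import combinations


def triangle_area(a, b, c):
    """Area of the triangle spanned by three (x, y) points."""
    return abs((b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])) / 2.0


def angle_at(vertex, p, q):
    """Unsigned angle p-vertex-q in radians."""
    v1 = (p[0] - vertex[0], p[1] - vertex[1])
    v2 = (q[0] - vertex[0], q[1] - vertex[1])
    cross = v1[0] * v2[1] - v1[1] * v2[0]
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    return abs(math.atan2(cross, dot))


def touch_features(points):
    """Turn five (x, y) touch points into a feature vector.

    Assumed feature set: 10 pairwise distances, 10 triangle areas and
    4 angles between neighbouring touches as seen from the centroid.
    The points are expected in a consistent finger order.
    """
    if len(points) != 5:
        raise ValueError("expected exactly five touch points")
    distances = [math.dist(a, b) for a, b in combinations(points, 2)]
    areas = [triangle_area(a, b, c) for a, b, c in combinations(points, 3)]
    centroid = (sum(x for x, _ in points) / 5, sum(y for _, y in points) / 5)
    angles = [angle_at(centroid, points[i], points[i + 1]) for i in range(4)]
    return distances + areas + angles
```

All of these features depend only on the relative geometry of the touches, so they do not change when the hand is moved or rotated on the display. A vector like this could then be passed to any classifier, such as an SVM.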

In “Design and Validation of Two-Handed Multi-Touch Tabletop Controllers for Robot Teleoperation”, Micire et al. describe the DREAM (Dynamically Resizing, Ergonomic And Multitouch) controller. The controller is designed for robot teleoperation, a task currently performed with specific joysticks that allow “chording” – the use of multiple fingers on the same hand to manage complex and coordinated movements of the robot. Due to their lack of physical feedback, multitouch displays have been regarded as inappropriate for such tasks. The authors agree that simply emulating the physical 3D world (and its controls) on a flat 2D display is doomed to failure, but they offer an alternative: multitouch controls should be designed around the biomechanical characteristics of each individual’s hand. Because multitouch controls are soft, programmable controls, they can adapt to each user individually rather than to an average user, as physical controls must. This approach is demonstrated with the DREAM controller (a PlayStation controller split in half, with each half appearing under one of the user’s hands). The position of the user’s fingers determines the location of the controller as well as its size and functions. In the paper, the authors describe how they determine the presence of a user’s hand (hand detection/registration), how they determine which hand (left or right) it is, and why their approach does not rely on Cartesian coordinates (rotation insensitivity).

The next article that explores multitouch data from a non-gesture perspective is “See Me, See You: A Lightweight Method for Discriminating User Touches on Tabletop Displays.” Here, Zhang et al. describe how to discriminate users (by their position around a tabletop) based on the orientation of the touch. With data from 8 participants (3072 samples), they build an SVM model with 97.9 % accuracy. For details, see the CHI paper above, the video below or this MSc thesis.
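The underlying intuition can be shown with a much simpler rule than the SVM used in the paper: a finger tends to point away from its owner, so the orientation of a touch hints at the side of the table the user is standing on. The Python sketch below assumes four users, one per table side, and an orientation angle measured in degrees counter-clockwise from the table's x-axis. The seat names, the reference angles and the nearest-angle rule are all illustrative assumptions.

```python
# Assumed pointing direction (degrees) of a finger for a user on each side:
# a user at the south edge points north (90), a user at the east edge points west (180), etc.
SEAT_ANGLES = {"south": 90.0, "east": 180.0, "north": 270.0, "west": 0.0}


def circular_difference(a, b):
    """Smallest absolute difference between two angles in degrees."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def guess_seat(orientation_deg, seat_angles=SEAT_ANGLES):
    """Return the seat whose expected pointing direction is closest to the touch orientation."""
    return min(seat_angles, key=lambda seat: circular_difference(orientation_deg, seat_angles[seat]))
```

For example, `guess_seat(85)` picks the user at the south edge. A learned model is preferable in practice because real touch orientations are noisy and users reach across the table at many angles.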

Ewerling et al. suggested a processing pipeline for multitouch detection on large touch screens that combines maximally stable extremal regions and agglomerative clustering in order to detect finger touches, group finger touches into hands and distinguish the left hand from the right (when all fingers of a single hand touch the display). Their motivation was the fact that existing hardware platforms only detect single touches and assume that all of them belong to the same gesture, which limits the design space of multitouch interaction. The presented solution was evaluated on a diffused illumination display (97 % finger registration accuracy, 92 % hand registration accuracy), but is applicable to all multitouch displays that provide a depth map of the region above the display. For details, see the paper “Finger and Hand Detection for Multi-Touch Interfaces Based on Maximally Stable Extremal Regions” or this MSc thesis.

While the papers above present hand and finger registration techniques as part of a broader context, Au and Tai in “Multitouch Finger Registration and Its Applications” provide two use cases: the palm menu and the virtual mouse (for details, see the video below). Their method for hand and finger registration depends only on touch coordinates and is thus hardware independent.

FingerScape: Multi-touch User Interface Framework

Intuitive human-computer interaction (HCI) is becoming an increasingly popular topic in computer science. The need for intuitive HCI is fueled by the growing complexity of software and the growing amounts of data that users must master and interact with. Devices on which one or more users can manage content intuitively (e.g. with their fingers) and simultaneously are currently very rare and expensive (e.g. Microsoft Surface), and are usually provided only as technology showcases.

An important advantage of such devices is the possibility of a multi-user experience, which enables additional ways for users to interact with each other. This makes such systems well suited to applications such as multimedia content viewing and browsing (e.g. in museums, galleries and exhibitions) and the visualization of large quantities of information.

The goal of the project is to create an open source platform that will ease the development of multi-touch multi-user (MTMU) applications. No such freely available, complete solution exists at this time. We believe that such a platform will increase the popularity and production of multi-touch systems and enable more rapid development of MTMU applications.

Hardware

As a first step towards our goal, we have set up an FTIR-based multitouch table to provide a hardware basis for the development of the platform.

The video below shows the existing demo software (developed by the NUI group) running on our table as a demonstration of the concept.


(old test video)